A 7x jump in market cap from $144B to $1T in just over 3 years – How did Nvidia do it?

Nvidia, the tech giant renowned for its graphics processing units, has been riding one of the largest bull runs in history, achieving a remarkable 7x surge in market capitalization from $144 billion to a staggering $1 trillion in a little over three years. But what propelled this astronomical growth? Let’s dissect Nvidia’s strategic playbook and uncover the secrets that fueled its unstoppable rise.

1. Gaming Dominance: Laying the Foundation

In 2020, Nvidia stood as a technological powerhouse, deeply rooted in the gaming industry. The company’s graphics processing units had already become a staple for gamers worldwide and formed the core of its success. In Q4 2020, Nvidia held a whopping 83% of the discrete GPU market. Two key factors helped it reach this dominant position:

- Technology breakthroughs such as DLSS and ray tracing: Nvidia used its industry relationships with leading game studios to drive adoption of its exclusive DLSS and RTX software features, which are tied to its RTX GPUs (introduced with the Turing-based 2000 series and extended in the then-latest Ampere-based 3000 series), and it marketed them very effectively. The key strategy here is software innovation tightly coupled with hardware advances, with a focus on making it easy for developers to onboard the technologies, essentially creating a platform-based competitive moat.

- Availability and price-to-performance ratio: Gamers basically need two things: the best performance for the money they’re paying and GPUs that are actually available at retail prices. Despite a massive shortage of Nvidia GPUs caused by the crypto-mining boom, Nvidia’s availability was generally better than AMD’s. This may be because AMD had to meet its supply contracts with Sony and Microsoft for console GPUs, and also had its manufacturing capacity split with its CPU demand. Nvidia’s 3000 series offered a significant leap in price-to-performance over the previous-generation 2000 series: basically twice the performance at half the price 🤷♂️

2. CUDA – More than Gaming

“In 2009, I remember giving a talk at NIPS [now NeurIPS] where I told about 1,000 researchers they should all buy GPUs because GPUs are going to be the future of machine learning,” – Geoffrey Hinton

Hinton was the Ph.D. supervisor of Ilya Sutskever (now cofounder and chief scientist at OpenAI) and Alex Krizhevsky, who together with Hinton created AlexNet, the pioneering computer-vision neural network that directly led to the next decade’s major AI success stories: everything from Google Photos, Google Translate and Uber to Alexa and AlphaFold. AlexNet itself was trained on two Nvidia GTX 580 GPUs using CUDA.

The Platform Investment

- In a masterstroke of long-term strategy, Nvidia decided to build out CUDA, a parallel programming model that, combined with its GPUs, forms a full-stack computing platform. Nvidia poured resources into it over more than a decade, making it immensely capable, and made it available to developers for free. The only catch? It works only with Nvidia GPUs.

- Outcome: CUDA opened up the parallel-processing capabilities of Nvidia GPUs beyond gaming to general-purpose computing, with a focus on building capabilities for growth sectors such as aerospace, bioscience research, mechanical and fluid simulation, energy exploration and, most importantly, the deep learning and machine learning powering the modern AI revolution. Four million developers now work with CUDA, and it has been downloaded over 40 million times. An incredibly capable platform offered for free, with vendor lock-in and network effects that grow a developer base into a competitive moat? Everyone from Microsoft to Salesforce has used this tactic effectively to dominate their markets.

3. The AI Boom

“NVIDIA’s Big AI Moment Is Here”

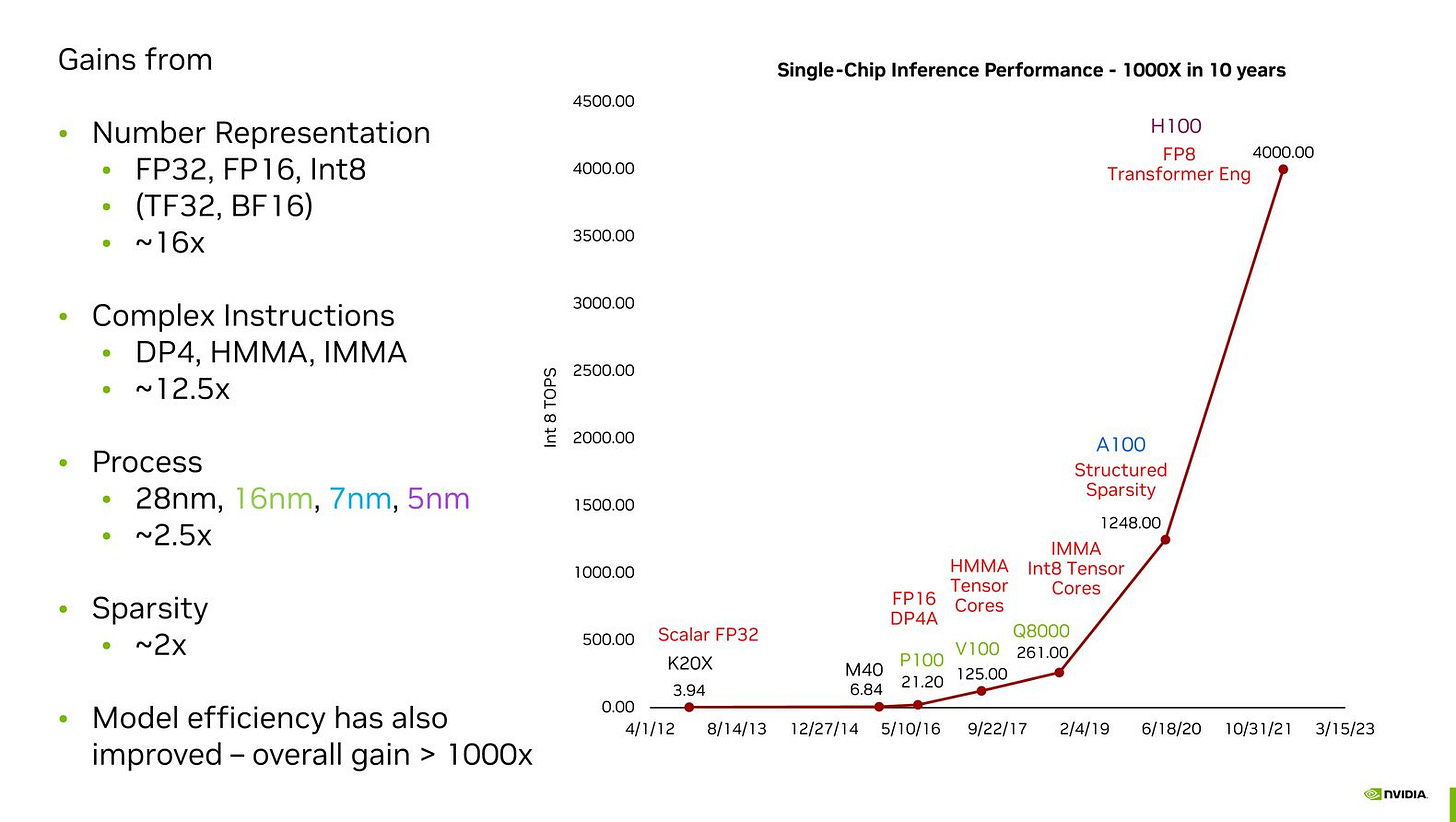

- We will not get into ChatGPT’s success here, but Nvidia is at its heart: reportedly more than 10,000 Nvidia GPUs were used to train it. The world cannot get enough of Nvidia’s flagship H100 GPUs, which are optimized for generative AI workloads. Nvidia chief scientist Bill Dally recently summed up the progress behind this demand with a graph showing a 1000x jump in single-chip inference performance over 10 years.

- The H100 Demand Squeeze: TSMC is on track to deliver 550,000 H100 GPUs to Nvidia this year, for potential H100 revenues of $13.75 billion to $22 billion in 2023. With estimated margins of 60-90% per chip, Nvidia could make over $10 billion in profit from H100 sales this year alone. And it’s sold out until well into 2024, leaving AI startups scrambling to buy it from scalpers and resellers at several times the retail price.

- Multi-sector demand: Demand for GPUs now spans nearly every sector, which has allowed Nvidia to ride out seismic shifts such as the crypto collapse without slowing down.
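The figures in the list above can be sanity-checked with a few lines of back-of-the-envelope arithmetic. This is a minimal Python sketch under two assumptions of our own: that the reported revenue range is simply unit volume times an average selling price, and that "margin" means the share of revenue kept as profit. Neither reading comes from Nvidia’s own reporting.

```python
# Back-of-the-envelope checks on the H100 and inference-performance figures.
# Assumption: revenue = units * average selling price.
# Assumption: "margin" = fraction of revenue kept as profit.

units = 550_000                            # H100s TSMC is reportedly delivering in 2023
revenue_low, revenue_high = 13.75e9, 22e9  # reported 2023 H100 revenue range (USD)
margin_low, margin_high = 0.60, 0.90       # estimated per-chip margin range

# Implied average selling price per H100 at each end of the revenue range
price_low = revenue_low / units
price_high = revenue_high / units

# Profit range: lowest revenue at lowest margin, highest revenue at highest margin
profit_low = revenue_low * margin_low
profit_high = revenue_high * margin_high

# A 1000x gain over 10 years implies this compound annual improvement factor
annual_factor = 1000 ** (1 / 10)

print(f"Implied price per H100: ${price_low:,.0f} to ${price_high:,.0f}")
print(f"Profit range: ${profit_low / 1e9:.2f}B to ${profit_high / 1e9:.2f}B")
print(f"Implied annual inference speedup: {annual_factor:.2f}x")
```

Under these assumptions the implied price is $25,000 to $40,000 per chip, and profit ranges from about $8.25 billion to $19.8 billion. The "over $10 billion" estimate therefore holds only if revenue or margins land above the bottom of their ranges. The 1000x figure works out to inference performance roughly doubling every year.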

4. Accelerating Momentum – Extending the platform into the public cloud and specializing for sunrise sectors

- Partnership with cloud platforms: Deciding where not to compete is also essential to getting your strategy right. Nvidia understands the distribution strengths of the public cloud platforms, so to launch its new DGX Cloud, it has partnered with Microsoft Azure, Google Cloud Platform, and Oracle Cloud Infrastructure to instantly bring NVIDIA AI to nearly every company, from a browser.

- Building platforms for every sector: Nvidia is building out specialized platforms focused on workflows for major sectors such as:

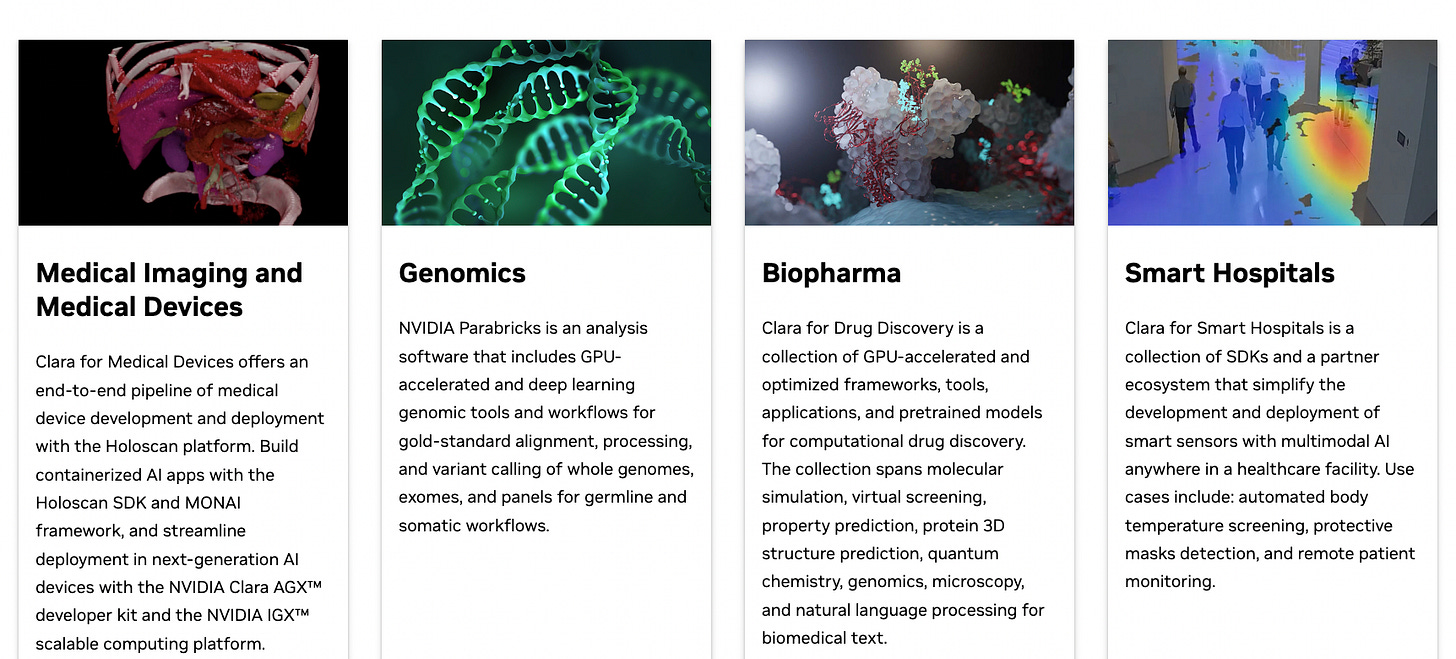

- Nvidia Clara for Healthcare

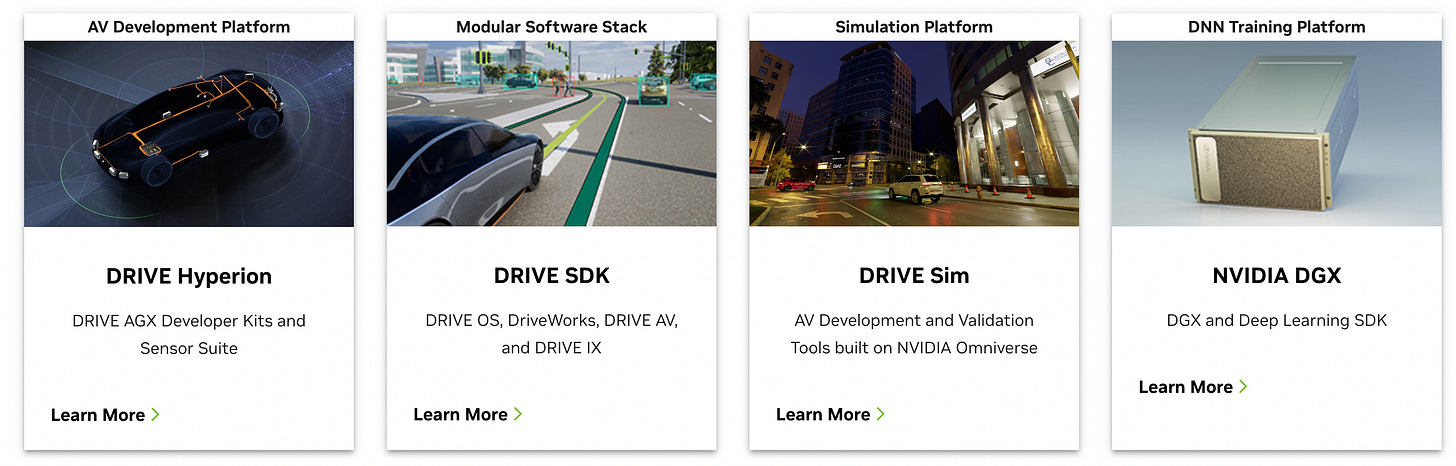

- Nvidia Drive for Automotive

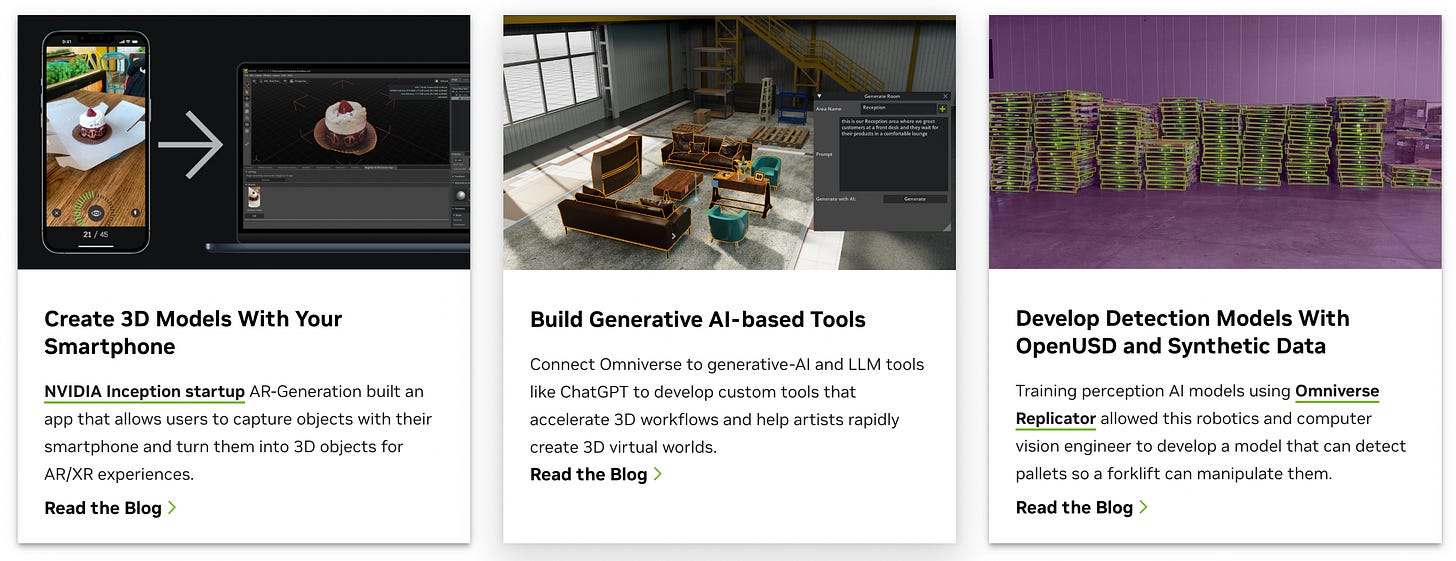

- Nvidia Omniverse for 3D modelling

5. Continuing the momentum – Competitive threats

While Nvidia is riding high on its long-term bets paying off so well, several competitors have taken notice and are preparing their own moves to capture value:

- Hyperscalers such as AWS and Google Cloud are investing in their own custom silicon: for AWS, chip development has become a core component of the business, with its Graviton CPUs estimated to power one in five cloud instances on EC2. AWS is not alone, though. Earlier this summer, Google launched its fifth generation of AI/ML accelerators, which it calls Tensor Processing Units (TPUs). Meanwhile, in China, Alibaba, Baidu, and Tencent are working on all manner of custom silicon, from AI acceleration to data processing and even Arm CPUs.

- AMD: Nvidia’s chip rivals aren’t sitting idle. AMD has announced its H100 competitor, the MI300, which CEO Lisa Su has claimed will be competitive with the H100, and AMD may partner with AWS to offer it.

- Breaking the CUDA moat: More open approaches such as OpenAI’s Triton and Meta’s Llama pose a direct threat to Nvidia’s walled-garden approach with CUDA.

In this rapidly evolving landscape, Nvidia’s continuous innovation and strategic agility will be crucial for navigating these challenges and sustaining its remarkable growth trajectory. The tech world watches with bated breath as Nvidia forges ahead, writing a new chapter in the story of tech dominance and evolution.